Would Your Board Sign Off on LTV from Raw Events?

A defensible LTV model your board can rebuild from first principles

If your board asked to rebuild customer lifetime value from raw event logs, would your numbers hold? Clicks, feature activations, renewal timestamps: every event is on the table. Most average-based LTV models buckle under that pressure because they hide churn volatility, cohort differences, and behavioral nuance. When directors reassemble the math from the ground up, buried assumptions surface and trust evaporates.

The real test is simple. Can someone reconstruct your LTV step by step from raw events without surprises?

Lessons worth carrying forward

ARPU times average lifespan fails under event-level review.

Survival analysis and incremental contribution capture real churn and revenue drivers.

Cohort funnels and predictive pipelines keep LTV tied to observed behavior.

Documentation and reproducibility are the foundation of board trust.

Why conventional LTV falls short

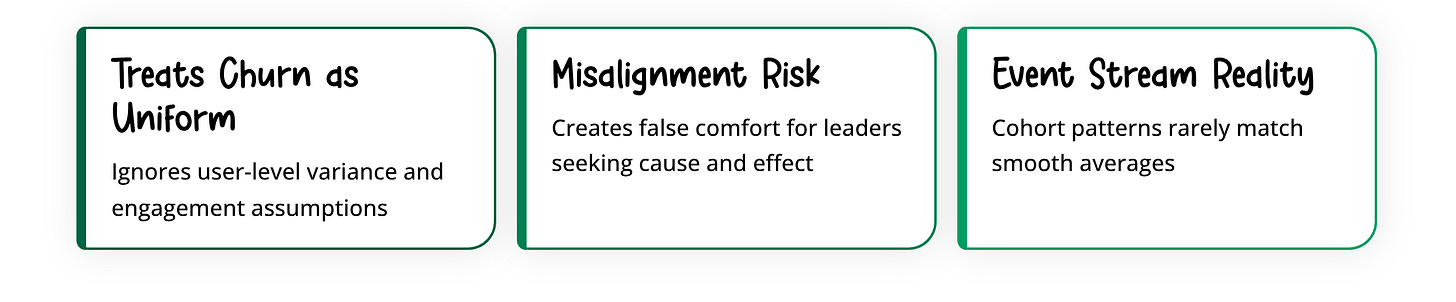

The common formula, average revenue per user (ARPU) times average customer lifespan, feels intuitive but fails an audit. It treats churn as uniform across customers, ignores user-level variance, and hides its assumptions about engagement. As Uriel Maslansky notes in Forbes, the narrow lens risks “misalignment with wider operational goals” and creates false comfort for leaders who want cause and effect they can verify. When LTV is recomputed from event streams, cohort churn patterns rarely match the smooth curve implied by averages. If the math looks too tidy, it probably will not survive inspection.
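To see how averaging hides variance, here is a minimal Python sketch with two hypothetical acquisition cohorts. It assumes, as many spreadsheets do, that "average lifespan" is computed as 1 / blended monthly churn; the cohort names and numbers are illustrative, not benchmarks.

```python
# Hypothetical acquisition cohorts; numbers are illustrative assumptions.
COHORTS = {
    "paid_search": {"users": 1000, "arpu": 50.0, "monthly_churn": 0.20},
    "referral": {"users": 1000, "arpu": 50.0, "monthly_churn": 0.05},
}


def naive_ltv(cohorts: dict) -> float:
    """ARPU x average lifespan, with lifespan taken as 1 / blended churn."""
    total = sum(c["users"] for c in cohorts.values())
    arpu = sum(c["arpu"] * c["users"] for c in cohorts.values()) / total
    churn = sum(c["monthly_churn"] * c["users"] for c in cohorts.values()) / total
    return arpu / churn


def cohort_weighted_ltv(cohorts: dict) -> float:
    """LTV per cohort (ARPU / churn) first, then the user-weighted mean."""
    total = sum(c["users"] for c in cohorts.values())
    return sum(
        c["users"] * c["arpu"] / c["monthly_churn"] for c in cohorts.values()
    ) / total


print(f"naive:  {naive_ltv(COHORTS):.2f}")            # naive:  400.00
print(f"cohort: {cohort_weighted_ltv(COHORTS):.2f}")  # cohort: 625.00
```

The gap comes from averaging churn rates before inverting them: 1 / mean(churn) is not mean(1 / churn), so the low-churn cohort's long tail disappears from the blended number.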

Survival analysis that models churn like time, not fate

Static churn rates assume the hazard of leaving is constant over a customer's life. Real customers do not behave that way. Survival models treat churn as a time-to-event outcome. Techniques such as Weibull survival models or Cox proportional hazards regression use actual histories, such as onboarding completions, feature activations, and support tickets, to estimate a survival curve for each user or segment. Strong.io reports this approach can reduce forecast error by up to 20 percent compared to exponential (constant-hazard) assumptions. That kind of measurable error reduction is exactly what earns board-level confidence.
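A survival curve can be estimated straight from churn and censoring events. The sketch below uses a Kaplan-Meier estimator, a nonparametric technique swapped in here for brevity; it is not the Weibull or Cox models named above and ignores per-user covariates. The records, the $50 monthly ARPU, and the 12-month horizon are hypothetical.

```python
from collections import Counter

# Hypothetical records: (months observed, churned?). churned=False means the
# customer was still active when observation stopped (right-censored).
RECORDS = [
    (1, True), (2, True), (2, False), (3, True), (4, False),
    (5, True), (6, False), (6, False), (8, True), (12, False),
]


def kaplan_meier(records):
    """Return [(month, survival probability)] at each month with observed churn."""
    churned_at = Counter(t for t, churned in records if churned)
    exits_at = Counter(t for t, _ in records)  # churned or censored
    at_risk = len(records)
    survival = 1.0
    curve = []
    for month in sorted(exits_at):
        if churned_at[month]:
            survival *= 1 - churned_at[month] / at_risk
            curve.append((month, survival))
        at_risk -= exits_at[month]  # censored users leave the risk set after ties
    return curve


def survival_at(curve, month):
    """Step-function lookup: probability of still being active after `month`."""
    value = 1.0
    for t, s in curve:
        if t <= month:
            value = s
    return value


def ltv_from_curve(curve, monthly_arpu, horizon_months):
    """Undiscounted LTV: ARPU billed at the start of each month while active."""
    return sum(monthly_arpu * survival_at(curve, m) for m in range(horizon_months))


curve = kaplan_meier(RECORDS)
print(f"{ltv_from_curve(curve, 50.0, 12):.2f}")  # 340.71
```

Censored customers stay in the risk set until they exit, so they are not counted as churned the way a naive "churned / total" ratio would count them. A Weibull or Cox model would replace the step function with a fitted curve, optionally conditioned on per-user features.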

Incremental contribution beats averages every time

Revenue is not evenly distributed across events. A premium trial or referral click can create far more value than a routine login. Incremental contribution modeling, via uplift or regression, quantifies how specific events change downstream revenue. Driven Insights shows that combining first party event data with marketing touches reveals which interactions actually move LTV and which are noise. Averages blur strategy. Incremental contribution links choices to dollars.
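As a minimal sketch of incremental contribution, the code below compares mean downstream revenue for users who fired an event against users who did not. The event names, revenue figures, and the difference-in-means estimator are assumptions for illustration. Without a randomized holdout or controls this estimate is correlational; a real uplift or regression model would adjust for confounders.

```python
from statistics import mean

# Hypothetical users: events fired in the first 30 days, then 12-month revenue.
USERS = [
    ({"login", "premium_trial"}, 900.0),
    ({"login", "premium_trial", "referral_click"}, 1200.0),
    ({"login"}, 300.0),
    ({"login", "referral_click"}, 700.0),
    ({"login"}, 250.0),
    ({"login", "premium_trial"}, 800.0),
]


def naive_uplift(users, event):
    """Mean revenue of users who fired `event` minus mean revenue of those who did not.

    Correlational only: no randomization or confounder adjustment."""
    with_event = [rev for events, rev in users if event in events]
    without = [rev for events, rev in users if event not in events]
    if not with_event or not without:
        return None  # no comparison group, so uplift is undefined
    return mean(with_event) - mean(without)


for event in ("premium_trial", "referral_click", "login"):
    print(event, naive_uplift(USERS, event))
```

In this toy data `login` returns `None` because every user fired it, leaving no comparison group.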

Cohort based funnels expose hidden variance

Aggregates hide reality. Split users by acquisition channel, geography, or plan, then rebuild funnels from activation to adoption to renewal. Contentsquare reports that cohort funnels expose up to 30 percent variation in LTV drivers that aggregate models conceal. Directors can compare funnel drop off using raw counts, then reconcile revenue with the mechanics that produced it. That transparency turns debate into decisions.
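Rebuilding a funnel per cohort from raw counts takes only a few lines. The stage names, channels, and events below are hypothetical; the sketch counts distinct users who reached each stage per channel, then computes stage-to-stage conversion.

```python
from collections import defaultdict

STAGES = ("signup", "activation", "adoption", "renewal")

# Hypothetical raw events: (user_id, acquisition_channel, stage reached).
EVENTS = [
    ("u1", "paid_search", "signup"), ("u1", "paid_search", "activation"),
    ("u2", "paid_search", "signup"),
    ("u3", "paid_search", "signup"), ("u3", "paid_search", "activation"),
    ("u3", "paid_search", "adoption"),
    ("u4", "referral", "signup"), ("u4", "referral", "activation"),
    ("u4", "referral", "adoption"), ("u4", "referral", "renewal"),
    ("u5", "referral", "signup"), ("u5", "referral", "activation"),
    ("u5", "referral", "adoption"),
]


def cohort_funnels(events):
    """Distinct users reaching each stage, per acquisition channel."""
    reached = defaultdict(lambda: defaultdict(set))
    for user, channel, stage in events:
        reached[channel][stage].add(user)
    return {
        channel: [len(stages[s]) for s in STAGES]
        for channel, stages in reached.items()
    }


def step_conversion(counts):
    """Stage-to-stage conversion rates; None where the prior stage is empty."""
    return [nxt / prev if prev else None for prev, nxt in zip(counts, counts[1:])]


for channel, counts in cohort_funnels(EVENTS).items():
    print(channel, counts, step_conversion(counts))
```

Because the funnel is rebuilt from distinct user IDs rather than pre-aggregated rates, a director can trace every count back to the underlying events.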

Real time predictive LTV that updates with behavior

Monthly or quarterly LTV snapshots cannot keep up with shifting behavior. Stream raw events into a machine learning pipeline and refresh LTV continuously. As Oren Cohen explains, near real time dashboards build board confidence because they allow proactive course correction. If a specific cohort shows an early churn spike, it is visible within days, not months. Speed of insight becomes a control system, not a retrospective report.
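A continuously refreshed LTV does not have to start as a heavy machine learning pipeline. The sketch below assumes a hypothetical event stream with `renewal` and `churn` events tagged by cohort, and a simple geometric model (LTV = revenue per renewal / per-period churn probability) that updates on every event. The class and event names are illustrative, not a specific product's API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CohortStats:
    renewals: int = 0
    churns: int = 0
    revenue: float = 0.0


class StreamingLTV:
    """Per-cohort LTV that updates on every event (geometric churn model)."""

    def __init__(self):
        self.stats = defaultdict(CohortStats)

    def on_event(self, cohort, event_type, amount=0.0):
        s = self.stats[cohort]
        if event_type == "renewal":
            s.renewals += 1
            s.revenue += amount
        elif event_type == "churn":
            s.churns += 1
        # Other event types are ignored by this minimal model.

    def ltv(self, cohort):
        s = self.stats[cohort]
        if s.renewals == 0 or s.churns == 0:
            return None  # not enough signal yet to estimate ARPU or churn
        churn_prob = s.churns / (s.renewals + s.churns)
        arpu = s.revenue / s.renewals
        return arpu / churn_prob


tracker = StreamingLTV()
for _ in range(9):
    tracker.on_event("2025-01", "renewal", 40.0)
tracker.on_event("2025-01", "churn")
print(tracker.ltv("2025-01"))
for _ in range(3):  # an early churn spike is reflected as soon as events land
    tracker.on_event("2025-01", "churn")
print(tracker.ltv("2025-01"))
```

The second estimate drops from roughly 400 to roughly 130 the moment the extra churn events arrive, with no batch job in between.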

Underused event signals that improve lift

First visit conversion. Early conversion patterns predict long-term value better than ARPU in the first 30 days. See Monocle.io. “The Overlooked Role of First Visit Conversion in Lifetime Value.” January 13, 2025.

Micro event clustering. Group granular behaviors such as tutorial completions and community posts into engagement archetypes with distinct LTV multipliers. See Decipad. “A Better Way To Calculate LTV: 5 Alternative Methods for SaaS Startups.” September 26, 2024.

Open-source summation scripts. Community-shared SQL and Python scripts on r/PPC help capture long-tail revenue that benchmarks miss. See r/PPC. “Customer Lifetime Value.” August 29, 2019.
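Micro-event clustering can be prototyped with plain k-means. The sources above do not specify an algorithm, so k-means, the two features (tutorial completions and community posts), the starting centroids, and the revenue figures are all assumptions. Each archetype's multiplier is its mean revenue divided by the overall mean.

```python
from math import dist
from statistics import mean

# Hypothetical users: (tutorial_completions, community_posts) in the first
# 60 days, plus observed 12-month revenue.
ENGAGEMENT = [
    ((0, 0), 120.0), ((1, 0), 150.0), ((0, 1), 130.0),
    ((5, 4), 600.0), ((6, 5), 720.0), ((4, 6), 640.0),
]


def kmeans(points, centroids, iterations=10):
    """Plain k-means from caller-supplied starting centroids (deterministic)."""
    for _ in range(iterations):
        # Assign each point to its nearest centroid (Euclidean distance).
        labels = [
            min(range(len(centroids)), key=lambda k: dist(p, centroids[k]))
            for p in points
        ]
        # Move each centroid to the mean of its members; keep it if empty.
        updated = []
        for k, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == k]
            updated.append(tuple(map(mean, zip(*members))) if members else old)
        centroids = updated
    return labels


def ltv_multipliers(users, labels):
    """Mean revenue per archetype divided by mean revenue overall."""
    overall = mean(rev for _, rev in users)
    return {
        k: mean(rev for (_, rev), label in zip(users, labels) if label == k) / overall
        for k in sorted(set(labels))
    }


labels = kmeans([features for features, _ in ENGAGEMENT], [(0, 0), (5, 5)])
print(labels)  # [0, 0, 0, 1, 1, 1]
print(ltv_multipliers(ENGAGEMENT, labels))
```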

Make it board defensible with process, not just math

Turn LTV from a black box into a transparent system.

Document assumptions. Link churn distribution, discount rate, and event weights to specific raw metrics.

Enable reproducibility. Package SQL, notebooks, and sample datasets so anyone can rerun the pipeline end to end.

Visualize drivers. Overlay survival curves with actual churn events. Show cohort funnels and incremental lift charts.

Run sensitivity analysis. Demonstrate how a 10 percent change in churn or ARPU flows through to LTV. Highlight stable versus fragile scenarios.

Adopt continuous monitoring. Move from static decks to live dashboards that feed from event streams.
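For the sensitivity step, a closed-form model makes the 10 percent shocks easy to trace. This sketch assumes constant per-period churn c, a constant per-period discount rate d, and ARPU collected at the start of each active period, which gives LTV = ARPU × (1 + d) / (c + d). The function names and inputs are hypothetical.

```python
def ltv(arpu, churn, discount=0.0):
    """Closed-form LTV under constant per-period churn and discount rate.

    Sums arpu * ((1 - churn) / (1 + discount)) ** t over t = 0, 1, 2, ...,
    i.e. ARPU collected at the start of every period a customer is active.
    """
    return arpu * (1 + discount) / (churn + discount)


def sensitivity(arpu, churn, discount=0.0, shock=0.10):
    """Relative change in LTV when ARPU or churn moves by +/- `shock`."""
    base = ltv(arpu, churn, discount)
    scenarios = {
        f"ARPU +{shock:.0%}": ltv(arpu * (1 + shock), churn, discount),
        f"ARPU -{shock:.0%}": ltv(arpu * (1 - shock), churn, discount),
        f"churn +{shock:.0%}": ltv(arpu, churn * (1 + shock), discount),
        f"churn -{shock:.0%}": ltv(arpu, churn * (1 - shock), discount),
    }
    return base, {name: value / base - 1 for name, value in scenarios.items()}


base, table = sensitivity(arpu=50.0, churn=0.05, discount=0.01)
print(f"base LTV: {base:.2f}")
for name, change in table.items():
    print(f"{name:>10}: {change:+.1%}")
```

With no discounting, a 10 percent rise in churn cuts LTV by about 9.1 percent while a 10 percent fall raises it by about 11.1 percent, so churn shocks are asymmetric in a way ARPU shocks are not.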

Simple average-based LTV will not survive reconstruction from raw events.

Survival analysis, incremental contribution, cohort funnels, and real time predictions ground LTV in behavior.

Documentation, reproducibility, and visual diagnostics build trust when boards rebuild the number from first principles.

Action steps

Ship a baseline survival model, then backtest it against historical churn.

Build an incremental contribution model for the top five events that correlate with revenue.

Stand up one cohort funnel per major channel, then compare drop off and LTV.

Wire a daily refresh for predictive LTV, even if the first version is a lightweight model.

Publish a reproducibility pack with SQL, a notebook, and a sensitivity table.
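The first action step, a baseline survival model with a backtest, can start as small as a constant-churn fit. The sketch assumes hypothetical monthly active-user counts per signup cohort, fits one pooled month-over-month churn rate on older cohorts, and scores the predicted retention curve on a held-out cohort by mean absolute error.

```python
# Hypothetical monthly active-user counts per signup cohort (month 0, 1, 2, ...).
TRAIN = {
    "2024-01": [1000, 900, 820, 750, 690],
    "2024-02": [800, 704, 632, 576, 528],
}
HOLDOUT = [900, 774, 693, 630, 576]


def fit_constant_churn(cohorts):
    """Pooled month-over-month churn: 1 - users retained / users at risk."""
    at_risk = sum(sum(counts[:-1]) for counts in cohorts.values())
    retained = sum(sum(counts[1:]) for counts in cohorts.values())
    return 1 - retained / at_risk


def backtest(churn, observed):
    """Mean absolute error between predicted and observed retention share."""
    start = observed[0]
    errors = [
        abs((1 - churn) ** m - count / start)
        for m, count in enumerate(observed)
        if m > 0  # month 0 is 100% by definition
    ]
    return sum(errors) / len(errors)


churn = fit_constant_churn(TRAIN)
print(f"fitted monthly churn: {churn:.1%}")
print(f"holdout MAE (retention share): {backtest(churn, HOLDOUT):.3f}")
```

In this toy data the holdout cohort churns faster than the fitted rate, which is one sign that a constant hazard is too coarse and a Weibull or Cox model may fit better.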

Boards do not reject LTV because they dislike the metric. They reject it when the path from events to value is opaque. Make that path observable and the conversation changes from doubt to direction.

What is the one raw event that most surprised you when you linked it to LTV, and how did it change your roadmap?